The rising importance of AI ethics and data privacy compliance

Artificial intelligence (AI) is revolutionizing industries, offering unprecedented efficiencies, insights, and automation. However, as AI systems increasingly process vast amounts of personal data, concerns about ethical AI use and privacy compliance are growing. Businesses must align AI ethics with data privacy laws to foster trust, reduce legal risks, and maintain compliance with evolving regulations like the GDPR and the California Consumer Privacy Act (CCPA). To support organizations in managing these risks effectively, TrustArc Arc provides a unified privacy management platform that centralizes assessments, policy governance, and compliance workflows.

Failure to integrate ethical AI practices and privacy safeguards can result in significant consequences, including legal penalties, reputational damage, and loss of customer trust. Organizations leveraging AI must adopt responsible governance frameworks that align with privacy laws while prioritizing fairness, transparency, and accountability in AI decision-making. By prioritizing ethical AI development, businesses can create robust AI solutions that respect individual rights and uphold regulatory requirements while driving innovation and efficiency.

What is ethical AI, and why it’s critical for your organization

Ethical AI involves developing and deploying artificial intelligence systems that adhere to fairness, accountability, transparency, and data protection principles. These principles help prevent AI systems from reinforcing biases, exploiting user data, or operating in ways that harm individuals or society.

By implementing ethical AI frameworks, organizations can:

- Reduce the risk of algorithmic bias and discrimination

- Improve transparency in AI-driven decision-making

- Align AI systems with legal and regulatory requirements

- Foster consumer confidence and trust

- Strengthen brand reputation by demonstrating responsible AI practices

AI regulations and governance frameworks, such as the EU AI Act and the NIST AI Risk Management Framework, emphasize the importance of ethical AI, reinforcing that businesses must integrate responsible AI practices to stay compliant and competitive. Companies that proactively implement ethical AI frameworks are more likely to gain a competitive edge in an increasingly AI-driven marketplace.

AI ethics and data privacy: Key challenges organizations must address

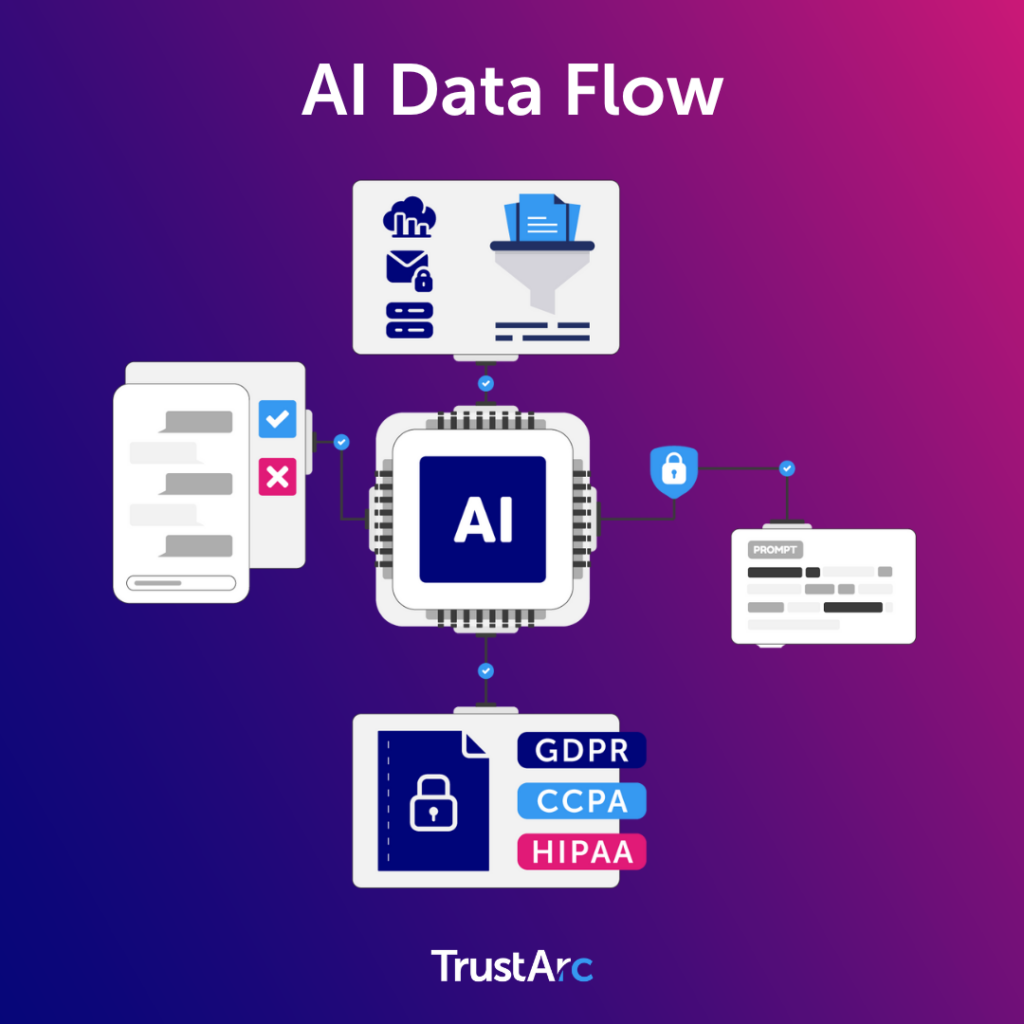

Data privacy safeguards individuals’ personal information from unauthorized access, use, or disclosure. AI systems, particularly those trained on large datasets, present unique privacy challenges, such as:

- Bias and fairness: AI models can inadvertently reinforce discrimination if trained on biased datasets.

- Transparency: Many AI algorithms operate as “black boxes,” making it difficult to understand how they arrive at decisions.

- Accountability: Organizations must take clear responsibility for AI-driven decisions.

- Security risks: AI systems handling sensitive personal data are potential targets for cyber threats.

- Regulatory compliance: Governments worldwide are enacting strict AI and data privacy regulations that businesses must adhere to.

To mitigate these risks, businesses must adopt robust data privacy frameworks, conduct AI impact assessments, and integrate human oversight into AI-driven processes. Building a culture of responsible AI governance enables organizations to address privacy concerns proactively, before they escalate.

Best practices to strengthen data privacy compliance in AI systems

Organizations can safeguard data privacy in AI systems by implementing key best practices:

Strengthen AI ethics with data minimization and secure data storage

Data minimization for better AI ethics and privacy protection

Businesses should limit data collection to only what is necessary for the AI system’s intended function. Implementing strict data governance policies reduces privacy risks and ensures compliance with regulations. Data minimization also enhances system efficiency by reducing redundant or unnecessary data processing.
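
As a minimal sketch of data minimization, the snippet below keeps only the fields on an allow-list before a record reaches an AI pipeline. The field names and the `ALLOWED_FIELDS` set are hypothetical. In practice, the allow-list would come from the organization's own data governance policy.

```python
# Hypothetical allow-list: the only fields this illustrative model needs.
ALLOWED_FIELDS = {"account_age_days", "plan_tier", "monthly_usage"}


def minimize(record: dict) -> dict:
    """Return a copy of the record containing only allow-listed fields."""
    return {key: value for key, value in record.items() if key in ALLOWED_FIELDS}


raw_record = {
    "email": "user@example.com",    # not needed by the model, so it is dropped
    "date_of_birth": "1990-01-01",  # not needed by the model, so it is dropped
    "account_age_days": 412,
    "plan_tier": "pro",
    "monthly_usage": 37.5,
}

minimized = minimize(raw_record)
print(minimized)
```

An allow-list is used here rather than a block-list so that any newly added field is excluded by default until someone decides it is necessary.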

Secure data storage to support data privacy compliance

Organizations must protect personal data through encryption, strict access controls, and regular security audits. Ensuring that only authorized personnel can access sensitive data helps prevent breaches and regulatory violations. Additionally, implementing multi-factor authentication (MFA) and other cybersecurity measures can enhance data protection.
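
The access-control and audit ideas above could be sketched roughly as follows. This is an illustration only: the roles, the permission name, and the in-memory `audit_log` are assumptions, and a production system would rely on an established identity provider and a tamper-resistant log store. Encryption and MFA are out of scope for this sketch.

```python
from datetime import datetime, timezone

# Hypothetical role-to-permission mapping; real systems would load this from policy.
ROLE_PERMISSIONS = {
    "privacy_officer": {"read_personal_data"},
    "analyst": set(),
}

audit_log = []  # in-memory stand-in for a persistent audit store


def can_access(role: str, permission: str) -> bool:
    """Check a permission and record the attempt for later security audits."""
    # Unknown roles get an empty permission set, so access is denied by default.
    allowed = permission in ROLE_PERMISSIONS.get(role, set())
    audit_log.append({
        "time": datetime.now(timezone.utc).isoformat(),
        "role": role,
        "permission": permission,
        "allowed": allowed,
    })
    return allowed


print(can_access("privacy_officer", "read_personal_data"))
print(can_access("analyst", "read_personal_data"))
```

Every attempt is logged, including denied ones, so that a later audit can review who tried to reach sensitive data and when.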

Use AI-driven tools to improve data privacy compliance

Automating compliance to support ethical AI

AI-powered compliance solutions can streamline privacy management by automating consent tracking, data access requests, and compliance reporting. These tools help organizations maintain regulatory compliance while reducing manual workload. By leveraging AI for compliance automation, businesses can improve efficiency and accuracy in privacy management. The TrustArc Privacy Management Platform can support these workflows by helping teams manage assessments and privacy tasks more efficiently.
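
To make consent tracking concrete, here is a small, hedged sketch of a consent registry. It is not how the TrustArc platform or any specific product works. The `ConsentRegistry` class, its method names, and the `model_training` purpose are all hypothetical, and the records live in memory purely for illustration.

```python
from dataclasses import dataclass, field
from datetime import datetime, timezone


@dataclass
class ConsentRegistry:
    """In-memory consent tracker; a real system would persist these records."""
    _records: dict = field(default_factory=dict)

    def record(self, user_id: str, purpose: str, granted: bool) -> None:
        # Keep the latest decision per (user, purpose), with a timestamp for reporting.
        self._records[(user_id, purpose)] = (granted, datetime.now(timezone.utc))

    def has_consent(self, user_id: str, purpose: str) -> bool:
        # No record means no consent: default to the most protective answer.
        granted, _ = self._records.get((user_id, purpose), (False, None))
        return granted

    def report(self) -> dict:
        # Count current decisions for a simple compliance summary.
        granted = sum(1 for is_granted, _ in self._records.values() if is_granted)
        return {"granted": granted, "denied_or_withdrawn": len(self._records) - granted}


registry = ConsentRegistry()
registry.record("user-1", "model_training", True)
registry.record("user-2", "model_training", True)
registry.record("user-2", "model_training", False)  # user-2 later withdraws consent

print(registry.has_consent("user-1", "model_training"))
print(registry.report())
```

A pipeline could call `has_consent` before using a person's data for a given purpose, so that a withdrawal takes effect without manual intervention.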

Building customer trust through transparent data privacy practices

Transparency in AI and data privacy practices is essential for building consumer trust. Businesses should:

- Clearly communicate how AI systems process user data.

- Provide accessible privacy policies detailing data collection, storage, and usage.

- Implement explainable AI techniques that allow users to understand AI-driven decisions.

- Establish clear channels for consumers to exercise their data rights, such as opting out of automated processing.

One compelling example of transparency in action is Integral Ad Science (IAS), which has set a new standard for responsible AI in digital advertising. By prioritizing transparency, IAS has built a robust framework that fosters trust among stakeholders and ensures ethical AI deployment. Their approach enhances ad performance and aligns with broader industry demands for accountability and fairness. Learn more about IAS’s commitment to transparency and how they are shaping the future of digital marketing.

By demonstrating transparency in AI operations, organizations can foster stronger relationships with customers and help them feel in control of their personal data.

Ethical AI development: How to build fair, accountable, and responsible AI

Ethical AI development involves designing AI systems that prioritize fairness, inclusivity, and accountability. Key strategies include:

- Bias mitigation: Regularly auditing AI models to identify and reduce bias.

- Human oversight: Assigning responsible personnel to monitor and review AI decisions when necessary.

- Diverse AI teams: Including professionals from varied backgrounds to promote inclusive AI development.

- Ongoing AI ethics training: Providing employees with the knowledge and resources to develop and deploy AI responsibly.
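
As one illustration of what a bias audit might measure, the sketch below compares the rate of favorable decisions across groups and reports the ratio of the lowest rate to the highest. This is only one of many fairness metrics. The group labels and decisions are made-up sample data, and the function names are hypothetical.

```python
from collections import defaultdict


def selection_rates(outcomes):
    """Compute the share of favorable decisions (1) per group.

    `outcomes` is an iterable of (group, decision) pairs with decision in {0, 1}.
    """
    totals = defaultdict(int)
    favorable = defaultdict(int)
    for group, decision in outcomes:
        totals[group] += 1
        favorable[group] += decision
    return {group: favorable[group] / totals[group] for group in totals}


def disparate_impact_ratio(rates):
    """Ratio of the lowest to the highest group selection rate (1.0 means parity)."""
    highest = max(rates.values())
    return min(rates.values()) / highest if highest > 0 else 1.0


# Made-up decisions for two hypothetical groups.
decisions = [("A", 1), ("A", 1), ("A", 0), ("A", 1),
             ("B", 1), ("B", 0), ("B", 0), ("B", 0)]

rates = selection_rates(decisions)
ratio = disparate_impact_ratio(rates)
print(rates, round(ratio, 2))
```

A ratio well below 1.0 suggests the model deserves closer human review. The threshold that triggers a review is a policy decision rather than something the code can determine.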

Conducting data privacy audits to ensure AI ethics and compliance

Regular privacy audits help businesses identify compliance gaps and improve AI governance. Steps include:

- Data inventory: Mapping all AI-related data collection and processing activities.

- Risk assessment: Evaluating potential privacy risks associated with AI models.

- Policy review: Updating internal policies to align with evolving AI regulations.

- Third-party compliance checks: Evaluating vendors and third-party AI solutions to confirm they meet ethical AI and data privacy standards.
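
The audit steps above could be supported by a simple machine-readable inventory. The following is a rough sketch under stated assumptions: the `ProcessingActivity` fields, the `SENSITIVE_CATEGORIES` set, the system names, and the vendor name are all hypothetical and would need to reflect an organization's actual policies.

```python
from dataclasses import dataclass, field
from typing import List, Optional

# Hypothetical set of data categories the organization treats as sensitive.
SENSITIVE_CATEGORIES = {"health", "biometric", "financial"}


@dataclass
class ProcessingActivity:
    """One entry in an illustrative AI data inventory."""
    system: str
    data_categories: List[str] = field(default_factory=list)
    vendor: Optional[str] = None   # third party involved, if any
    vendor_reviewed: bool = False  # has the vendor passed a compliance check?


def audit_findings(activity: ProcessingActivity) -> List[str]:
    """Return human-readable gaps for a single inventory entry."""
    findings = []
    if SENSITIVE_CATEGORIES & set(activity.data_categories):
        findings.append("sensitive data: risk assessment required")
    if activity.vendor and not activity.vendor_reviewed:
        findings.append(f"vendor '{activity.vendor}' not yet reviewed")
    return findings


inventory = [
    ProcessingActivity("churn_model", ["usage", "billing_plan"]),
    ProcessingActivity("claims_triage", ["health", "contact"], vendor="ExampleVendor"),
]

for activity in inventory:
    print(activity.system, audit_findings(activity))
```

Keeping the inventory as structured data means the same records can feed the risk assessment and the third-party check, instead of being tracked in separate documents.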

TrustArc solutions offer comprehensive tools to facilitate privacy audits, helping organizations comply with industry standards and regulations.

The future of AI ethics and data privacy compliance in a regulated world

As AI regulations continue to evolve, businesses must proactively adapt to new privacy requirements. Trends shaping the future of AI ethics and privacy compliance include:

- AI-driven privacy solutions that automate compliance and risk management.

- Decentralized data models that give users more control over their data.

- Stronger global AI regulations shaping responsible AI deployment.

- Increased collaboration between AI developers and regulators to establish ethical AI guidelines.

Improve your AI ethics strategy and strengthen data privacy compliance

Businesses must take proactive steps to align AI ethics with privacy compliance. TrustArc offers AI-driven compliance solutions that streamline data privacy management, reduce risk, and build consumer trust. Organizations prioritizing ethical AI now will be better positioned for future regulatory changes and consumer expectations.

Responsible AI checklist for ethical AI

Use this checklist to align your AI systems with common compliance standards.

Access the checklist

Demonstrate ethical AI and transparent data governance

Publicly show that your AI data governance is accountable, fair, and transparent.

Get certified

FAQs on AI ethics and data privacy compliance

Why is data privacy a concern with AI?

AI systems often process large volumes of personal data, increasing risks related to misuse, bias, and lack of transparency. Aligning AI systems with privacy laws mitigates these concerns and protects user rights.

How can AI systems be discriminatory?

Algorithmic bias can arise from unrepresentative training data, leading to discriminatory outcomes. Implementing fairness assessments, diverse datasets, and bias-mitigation techniques can help reduce discrimination risks.

What are key AI-related data privacy regulations businesses should be aware of?

Key regulations include the EU AI Act, GDPR, CCPA, and the Colorado AI Act, all of which impose obligations on AI data processing and privacy compliance.

By integrating ethical AI principles with strong privacy practices, businesses can foster trust, reduce risks, and ensure compliance in the rapidly evolving AI landscape.

How does the General Data Protection Regulation (GDPR) impact AI ethics and data privacy compliance?

The GDPR imposes strict requirements designed to ensure transparency, fairness, and accountability in AI systems that process personal data. It restricts how personal data can be used, mandates safeguards against bias, and gives users rights over automated decision-making, directly reinforcing ethical AI practices.

What does the California Consumer Privacy Act (CCPA) require from companies using AI systems?

The CCPA gives consumers control over how their data is used in AI, requiring companies to disclose data practices, provide opt-out options, and prevent unauthorized data sharing. These obligations help ensure AI systems operate transparently and responsibly.

How can organizations manage AI privacy compliance more efficiently?

Organizations can streamline AI privacy compliance by using structured governance processes and tools that centralize assessments, documentation, and risk monitoring. The TrustArc Privacy Management Platform helps teams stay aligned with evolving regulations and maintain clearer oversight of how AI systems handle personal data.