Embracing the AI revolution responsibly

Artificial Intelligence (AI) stands as a testament to human innovation, driving businesses towards unparalleled heights of efficiency and creativity. Many enterprises are rushing to integrate AI into their core operations, be it through in-house development, commercial vendors, or open-source communities. Others are more circumspect, waiting for the hype to wane and treading carefully in how they apply AI to their business.

While AI undoubtedly offers transformative potential, TrustArc’s 2023 Global Privacy Benchmarks Survey reveals why cautious optimism is warranted.

Our global, 360° view of how enterprises manage data protection and privacy identified 18 key challenges that companies often face with respect to privacy. Top-ranked among these was AI, followed by regulatory compliance risks and reputational risks from social media. In addition, three quarters of respondents rated AI as important or very important with respect to privacy concerns.

Like all transformative technologies, AI comes with substantial caveats. An integral part of the AI equation is its interaction with Personally Identifiable Information (PII) and sensitive data. The dilemma? The balance between harvesting the rewards of AI and understanding its inherent risks.

The list of vulnerabilities AI exposes is becoming more widely known, but it bears reviewing through a privacy lens.

Vulnerabilities caused by AI

Data poisoning: The intentional corruption of training data can mislead AI models. When it comes to privacy, malicious actors might taint data to produce outcomes that compromise user confidentiality or misrepresent user data.

Model theft: Stealing the parameters or architecture of an AI model can expose the data used to train it, as well as the logic behind its decisions. This can lead to privacy breaches, intellectual property theft, or unfair competition.

Model explainability, transparency, and accountability: AI models can be complex, opaque, or unpredictable, making it difficult to explain how they process data and reach decisions. If we don’t understand how an AI model reaches its conclusions, user consent, control, and recourse are undermined, and it becomes harder to meet regulatory obligations such as data protection impact assessments (DPIAs) and the right to explanation.

Bias and discrimination: AI models can reflect or amplify human biases and prejudices, resulting in unfair or discriminatory outcomes for certain groups of users. This can harm user trust and company reputation through the inadvertent exposure of specific groups’ data or the unintentional highlighting of sensitive patterns. Models trained on biased data sets may also violate anti-discrimination laws.

Model security: An inadequately secured AI model becomes a target. Any breach in the cybersecurity measures guarding the data used to train AI models can lead to data breaches and compromised user privacy.

False results, aka AI “hallucinations”: AI models quite often produce fabricated or outdated results, yet right or wrong, those results are presented to the user in compellingly written, confidence-inspiring prose. Decisions based on these inaccuracies can easily lead to stakeholders having their privacy breached.

These risks highlight the need for a robust and proactive approach to responsible AI governance, one that ensures that AI models are designed, developed, and deployed in a responsible and ethical manner.

Getting prepared: Updated privacy frameworks and principles in an AI world

The ability to track privacy program progress in the new AI landscape is essential. Frameworks and principles to guide organizations through this evolving landscape can be found in TrustArc’s PrivacyCentral, including:

- NIST AI Risk Management Framework – a U.S. framework with the aim to offer new guidance “…to cultivate trust in AI technologies and promote AI innovation while mitigating risk”.

- OECD AI principles – promoting the “use of AI that is innovative and trustworthy and that respects human rights and democratic values.”

- Nymity Privacy Management Accountability Framework™ – ensuring AI is being developed, implemented, and used in a privacy-friendly, non-discriminatory, transparent, and accountable manner by evaluating and measuring privacy compliance across frameworks and standards.

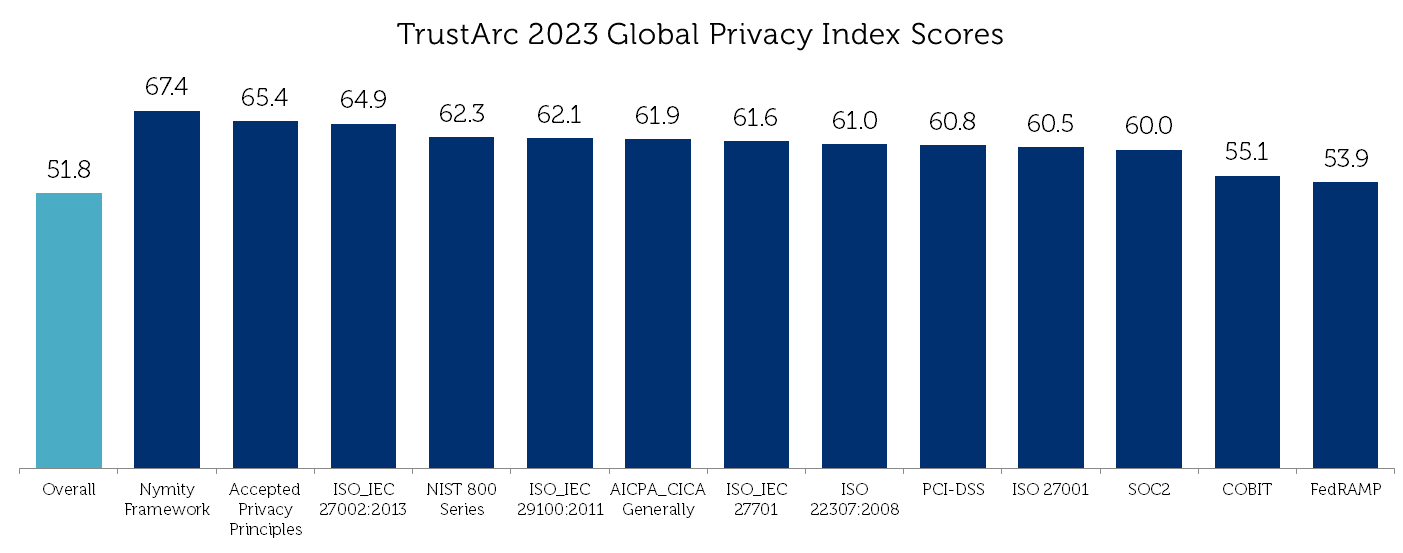

Among 13 standards listed in our most recent global survey, it was noteworthy that adoption of the Nymity Framework was associated with the highest Privacy Index competency scores.

The global recognition of AI’s potential and risks has led to a surge in proposed government regulations and standards. TrustArc’s in-house privacy and legal experts continuously review, map, and update laws and standards, including industry standard AI frameworks/principles, doing this work for you.

Proposed AI regulations and standards

In addition to the US national guidelines from NIST, seven US states are quickly adopting new AI regulations, and several new global regulations are taking shape. These include:

EU and UK AI Acts: These acts aim to regulate AI’s various uses to ensure user safety and data privacy.

Canada’s AIDA: This Act will likely stress responsible AI adoption, emphasizing the protection of user data and ensuring ethical AI use.

One key tool in preparing for the new regulatory landscape is an updated approach to Privacy Impact Assessments (PIAs).

You got this: Recognizing the familiarity of Privacy Impact Assessments

Privacy Impact Assessments (PIAs) are an essential tool for privacy professionals. These systematic processes help identify and mitigate the privacy risks of a project, system, or process that involves personal data. They help organizations comply with data protection laws, demonstrate accountability, and build trust with users and stakeholders. PIAs are not only a legal requirement in many jurisdictions but also a best practice for privacy by design.

For privacy professionals familiar with conducting PIAs, the AI challenge might seem daunting. Still, the underpinnings remain rooted in assessing impacts, a process most are already adept at. The good news is that the methodologies behind PIAs can be leveraged and extended to evaluate AI’s implications, be they ethical concerns, algorithmic biases, or other pertinent issues.

However, traditional PIAs may not be sufficient to address the unique challenges posed by AI. AI models often involve large volumes of data, complex processing operations, dynamic changes, and uncertain outcomes. These factors can make it difficult to assess the privacy impacts of AI using conventional methods and frameworks.

Therefore, organizations need to elevate their PIAs to account for the specific characteristics and risks of AI. This means adapting their PIA methodology, scope, criteria, and documentation to reflect the nature and context of AI. It also means involving relevant stakeholders from different disciplines and perspectives, such as data scientists, engineers, ethicists, lawyers, and users.

Conduct impact assessments to mitigate AI risk

In response, TrustArc has expanded its industry-leading Assessment Management workflow capabilities that empower organizations to conduct impact assessments to mitigate AI risk. Assessments include a pre-built, out-of-the-box AI Risk Assessment template based on the NIST AI framework, which can also be configured based on an organization’s tailored requirements. The AI governance features are integrated within TrustArc’s existing product offerings at no additional cost.

These include:

- AI, DPIA/PIA, self-configurable ethics assessments geared toward AI

- AI-algorithm-based risk scoring grounded in standards and laws, including NIST AI

- Pre-built AI Risk Assessment template based on NIST

- Ability to create workflows with Automation Rules. For example, for certain processing types a rule can be configured to trigger a PIA or AI assessment
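
The rule-based trigger described in the last item can be pictured with a minimal sketch. This is an illustration only, not TrustArc’s product API: the `AutomationRule` class, the `assessments_to_trigger` function, and the processing-type names are all hypothetical, chosen to show how a processing type might map to one or more required assessments.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class AutomationRule:
    """Hypothetical rule: matching processing types trigger one assessment."""
    processing_types: frozenset
    assessment: str  # e.g. "PIA" or "AI Risk Assessment"


def assessments_to_trigger(processing_type, rules):
    """Return the assessments triggered for a processing type.

    Results follow rule order and contain no duplicates.
    """
    triggered = []
    for rule in rules:
        matches = processing_type in rule.processing_types
        if matches and rule.assessment not in triggered:
            triggered.append(rule.assessment)
    return triggered


# Illustrative rule set; the processing-type labels are assumptions.
RULES = [
    AutomationRule(
        frozenset({"automated_decision_making", "profiling"}),
        "AI Risk Assessment",
    ),
    AutomationRule(frozenset({"profiling", "sensitive_data"}), "PIA"),
]

if __name__ == "__main__":
    print(assessments_to_trigger("profiling", RULES))
    print(assessments_to_trigger("newsletter", RULES))
```

In this sketch a processing activity tagged `profiling` would call for both assessments, while one that matches no rule would call for none. A real rule engine would likely key on richer record attributes than a single label.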

Address privacy management in AI

Additional Operational Templates have also been added to the Nymity platform to address privacy management in AI. The Operational Templates product includes over 1000 templates, including sample privacy policies, privacy notices, pre-built PIA and DPIA templates, information security checklists, sample incident response plans, procurement checklists, template contract language, sample consent language, marketing checklists, job descriptions, and plans for building privacy networks.

To support AI initiatives from the perspective of privacy, the following resources and templates have been added, among other updates:

- An explanatory list of key AI definitions and concepts

- A guide of Key Considerations for Building an AI Strategy

- A guide for Incorporating Ethical Principles and Human-Centered Values to AI systems

- A whitepaper on Organizational and Technical Considerations for Algorithmic Accountability

- An Algorithm Impact Assessment to assess the impact of AI and weigh its benefits against the risks

- A Checklist for Adhering to Ethical AI Principles

Effectively manage privacy program governance

Overall, these updates broaden PIAs to include assessments tailored for AI, covering algorithmic and ethical impact evaluations as well as guidance on the actions that mitigate risks and reduce harmful impacts. By updating PIAs with an AI focus, companies can manage and mitigate the privacy risks of AI systems and effectively manage privacy program governance in an emerging AI technology environment.

Current product updates help organizations with the following:

- Learning about and understanding what AI is and aligning this knowledge from a data governance, privacy, security, and compliance perspective;

- Setting out actions on AI Governance through a combination of a principle-based approach and an evidence-based approach that maps the data privacy risk layers of AI; and

- Operationalizing an organization’s use of AI in adherence to recognized AI principles and standards.

The application of these expanded features is key to staying ahead of expected regulatory changes.

TrustArc is the only platform with standards-readiness assessments that show how compliant a company is and measure its progress toward AI data compliance. These come with on-demand executive-level reporting to demonstrate compliance.

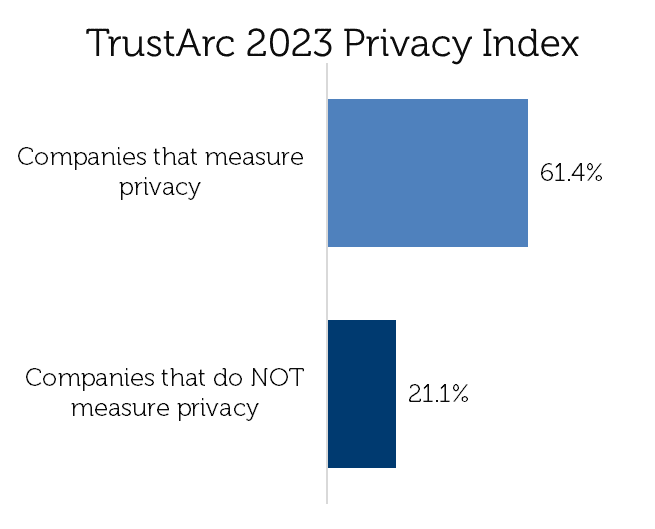

The need for measurement is essential. In our recent global survey, we asked: “Does your company currently measure the effectiveness of its privacy program?” The difference in privacy competence between companies that measure privacy versus those that do not was startling but not surprising.

Privacy competence for companies that measure their effectiveness was 3x higher than for those that do not and a full 10 percentage points above the overall global average.

The AI governance journey: Are you prepared?

In the absence of stringent regulations, many corporations are proactively shaping their AI ethics. These efforts include incorporating AI into risk management and privacy governance frameworks, and may involve building out specific AI ethics standards and creating employee training programs on the appropriate use of AI.

The AI revolution is here, and its implications for data privacy are profound. While the landscape may be unfamiliar, organizations can find solace in the fact that the foundational principles of impact assessments remain consistent. By leveraging existing PIA processes and embracing the new tools and frameworks available, businesses can confidently stride into the AI era, ensuring they reap its rewards while upholding the highest standards of data privacy.

TrustArc is committed to guiding organizations through this journey. We continuously innovate, keeping a pulse on regulatory dynamics and ensuring our clients remain ahead of the curve. With over 22 in-house privacy and legal experts, a library rich with standards, and more than 1000 operational templates, TrustArc stands as the beacon for businesses navigating the AI-infused data privacy terrain.

Whether it’s understanding AI vulnerabilities, staying updated with regulations, or proactively setting up AI ethics frameworks, companies have a responsibility to navigate this domain with caution and integrity. TrustArc is dedicated to facilitating the responsible and transparent use of AI.

As AI regulations emerge, you can trust in our commitment to support compliance and keep organizations well-equipped throughout this ever-evolving journey.